SeasonDepth: Cross-Season Monocular Depth Prediction Dataset and Benchmark under Multiple Environments

Introduction

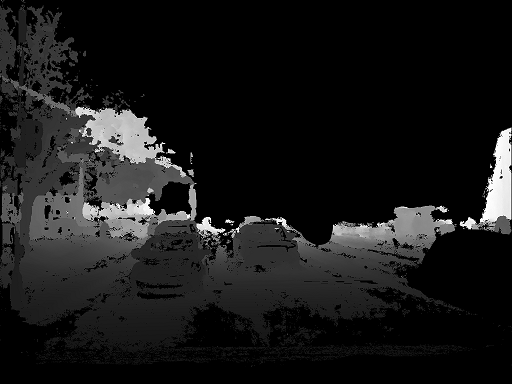

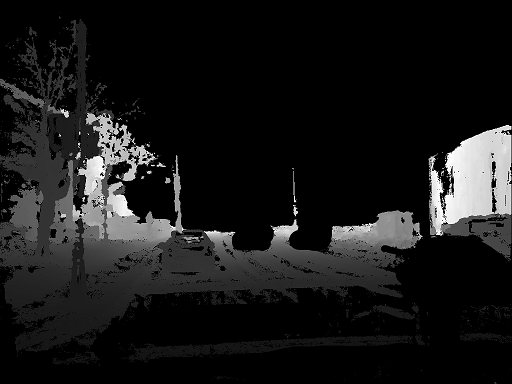

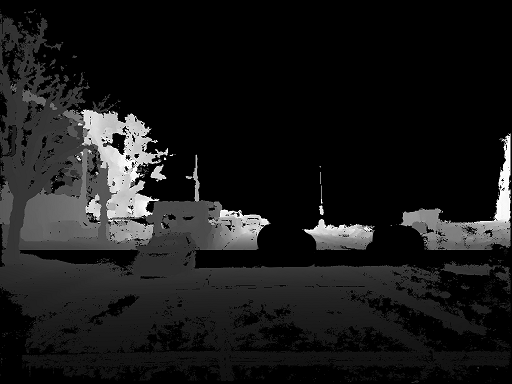

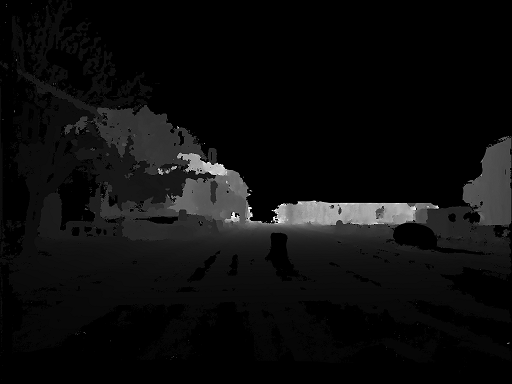

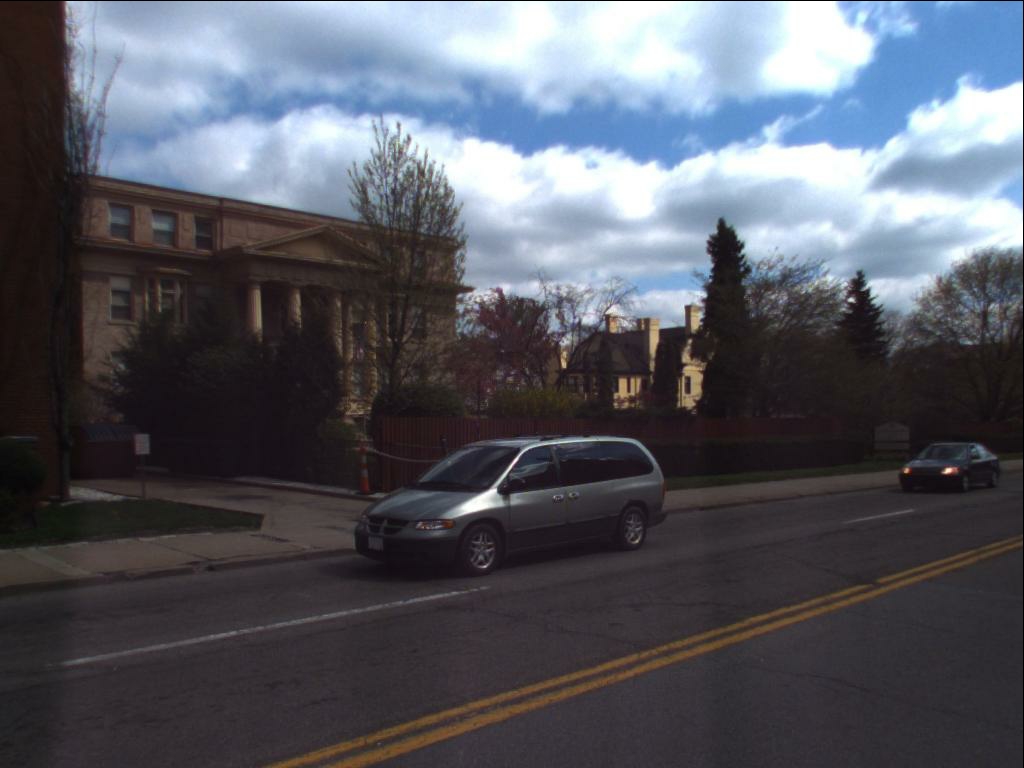

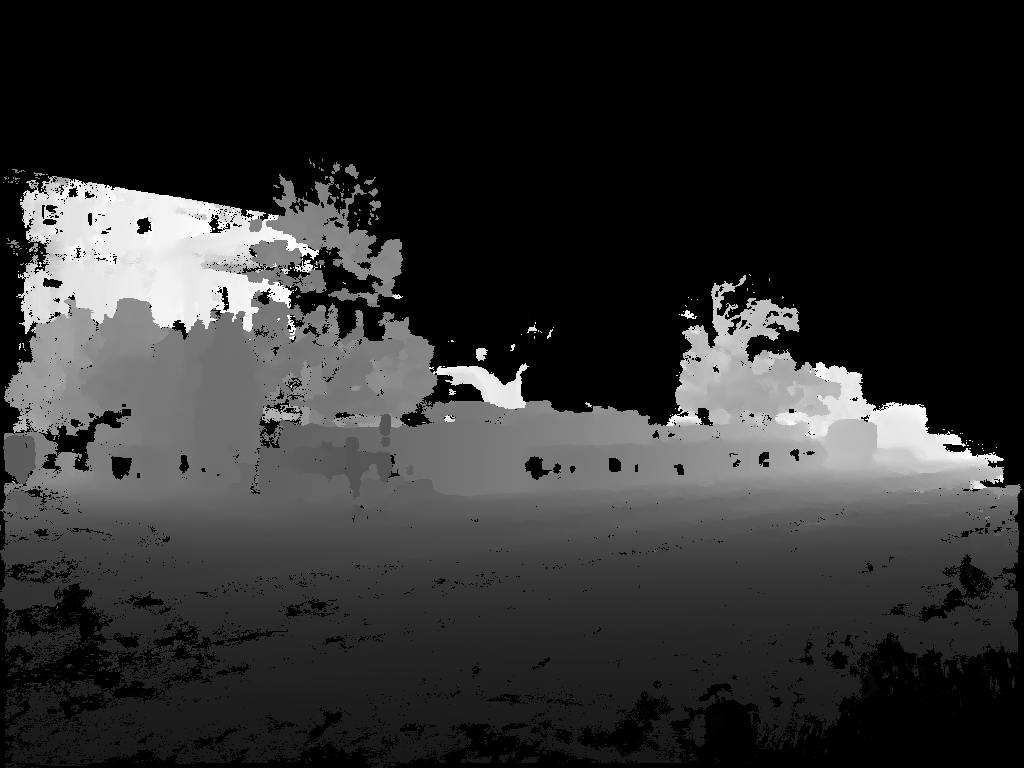

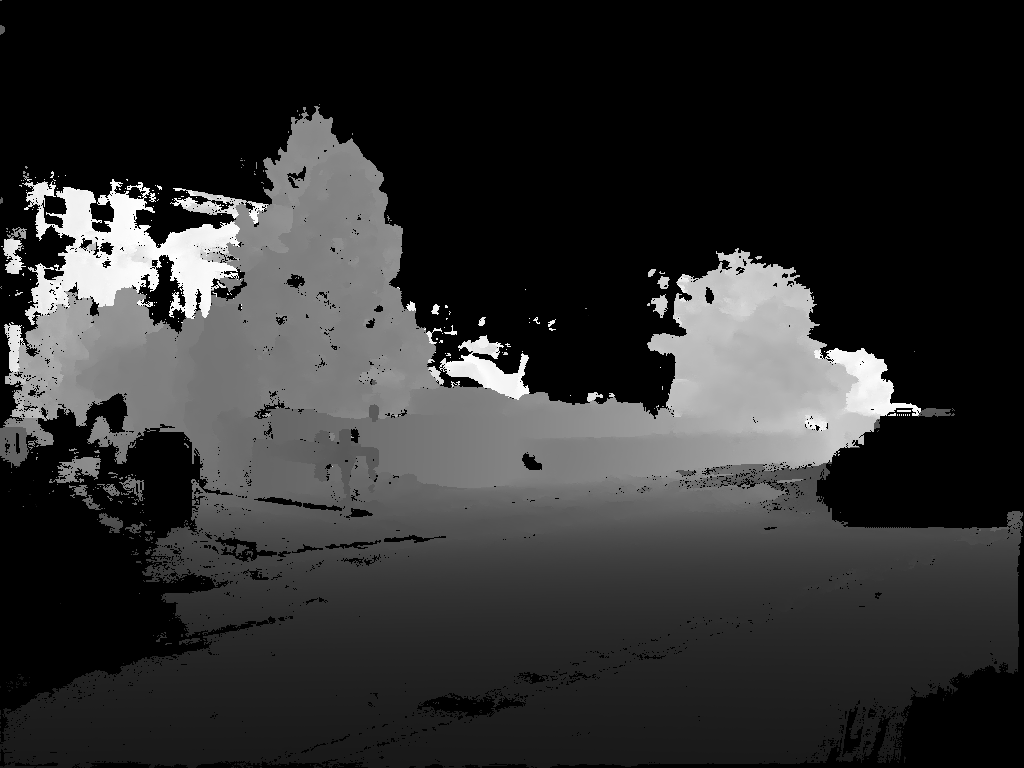

SeasonDepth is a monocular depth prediction dataset that contains multi-traverse outdoor images captured under changing environments. It is the first depth prediction dataset with multi-environment road scenes designed to benchmark depth prediction performance under different environmental conditions. See our arXiv paper for more details, and check out the toolkit repository for the SeasonDepth benchmark. The dataset is built with structure from motion (SfM) on top of the CMU Visual Localization and CMU-Seasons datasets.
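To make the idea of a cross-environment benchmark concrete, here is a minimal sketch under stated assumptions: it scores each environment with the standard absolute relative error (AbsRel), assumes predictions are already aligned to the ground-truth scale, and then summarizes the per-environment scores by their mean and variance, where a lower variance suggests more robustness to environmental change. The metric choice, the environment names, and the function names (`abs_rel`, `evaluate_environments`, `cross_environment_summary`) are hypothetical illustrations, not the official SeasonDepth toolkit, whose alignment and metrics may differ.

```python
from statistics import fmean, pvariance


def abs_rel(pred, gt):
    """AbsRel over pixels whose (flattened) ground-truth depth is positive.

    Assumes `pred` is already scale-aligned to `gt` (hypothetical setup).
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    return fmean(abs(p - g) / g for p, g in pairs)


def evaluate_environments(samples):
    """Average AbsRel per environment.

    `samples` maps a (hypothetical) environment name to a list of
    (prediction, ground_truth) pairs of flattened depth maps.
    """
    return {
        env: fmean(abs_rel(pred, gt) for pred, gt in pairs)
        for env, pairs in samples.items()
    }


def cross_environment_summary(per_env):
    """Mean and population variance of per-environment scores."""
    values = list(per_env.values())
    return fmean(values), pvariance(values)


if __name__ == "__main__":
    samples = {
        "sunny": [([2.0, 4.0], [2.0, 5.0])],
        "snow": [([1.0, 3.0], [0.0, 2.0])],  # the zero-depth pixel is ignored
    }
    per_env = evaluate_environments(samples)
    print(per_env, cross_environment_summary(per_env))
```

Reporting the variance alongside the mean separates a model that is uniformly good from one that excels in some conditions but degrades sharply in others.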

SeasonDepth Dataset

Dataset Download

The test set of the SeasonDepth Prediction Challenge has been released here for the leaderboard. Previously, we released the validation splits of SeasonDepth for zero-shot evaluation, including RGB images and depth maps under twelve different environments. The released dataset is hosted on figshare for long-term preservation, with the persistent Digital Object Identifier (DOI) 10.6084/m9.figshare.14731323. The validation set is available HERE. The v1.1 training set has the DOI 10.6084/m9.figshare.16442025 and is available HERE, together with the models fine-tuned on it; feel free to follow the BTS and SfMLearner repositories to fine-tune or evaluate them for the benchmark. The detailed format and structure of the validation and training sets can be found HERE.
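Since both releases are identified by figshare DOIs, a script can derive their landing and metadata URLs directly. The sketch below assumes the common figshare DOI pattern `10.6084/m9.figshare.<id>[.v<version>]` and the public figshare API v2 article endpoint; the helper names are hypothetical, and the `files`/`name`/`download_url` fields read by `list_files` reflect that API as we understand it, so please check the figshare API documentation before relying on them.

```python
import json
import re
import urllib.request

# Assumed figshare DOI layout: 10.6084/m9.figshare.<article id>, optionally .v<version>.
_FIGSHARE_DOI = re.compile(r"10\.6084/m9\.figshare\.(\d+)(?:\.v\d+)?")


def figshare_article_id(doi: str) -> int:
    """Extract the numeric figshare article id from a figshare DOI."""
    match = _FIGSHARE_DOI.fullmatch(doi.strip())
    if match is None:
        raise ValueError(f"not a figshare DOI: {doi!r}")
    return int(match.group(1))


def doi_url(doi: str) -> str:
    """Resolver URL that redirects to the dataset landing page."""
    return f"https://doi.org/{doi.strip()}"


def figshare_api_url(doi: str) -> str:
    """Public figshare API v2 metadata endpoint for the article (assumed)."""
    return f"https://api.figshare.com/v2/articles/{figshare_article_id(doi)}"


def list_files(doi: str):
    """Fetch article metadata and return (name, download_url) pairs.

    Requires network access; the JSON field names are assumptions.
    """
    with urllib.request.urlopen(figshare_api_url(doi)) as response:
        article = json.load(response)
    return [(f["name"], f["download_url"]) for f in article.get("files", [])]


if __name__ == "__main__":
    for doi in ("10.6084/m9.figshare.14731323", "10.6084/m9.figshare.16442025"):
        print(doi_url(doi), figshare_api_url(doi))
```

Going through `https://doi.org/` is the most durable option, because a DOI keeps resolving even if the hosting platform changes its own URLs.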

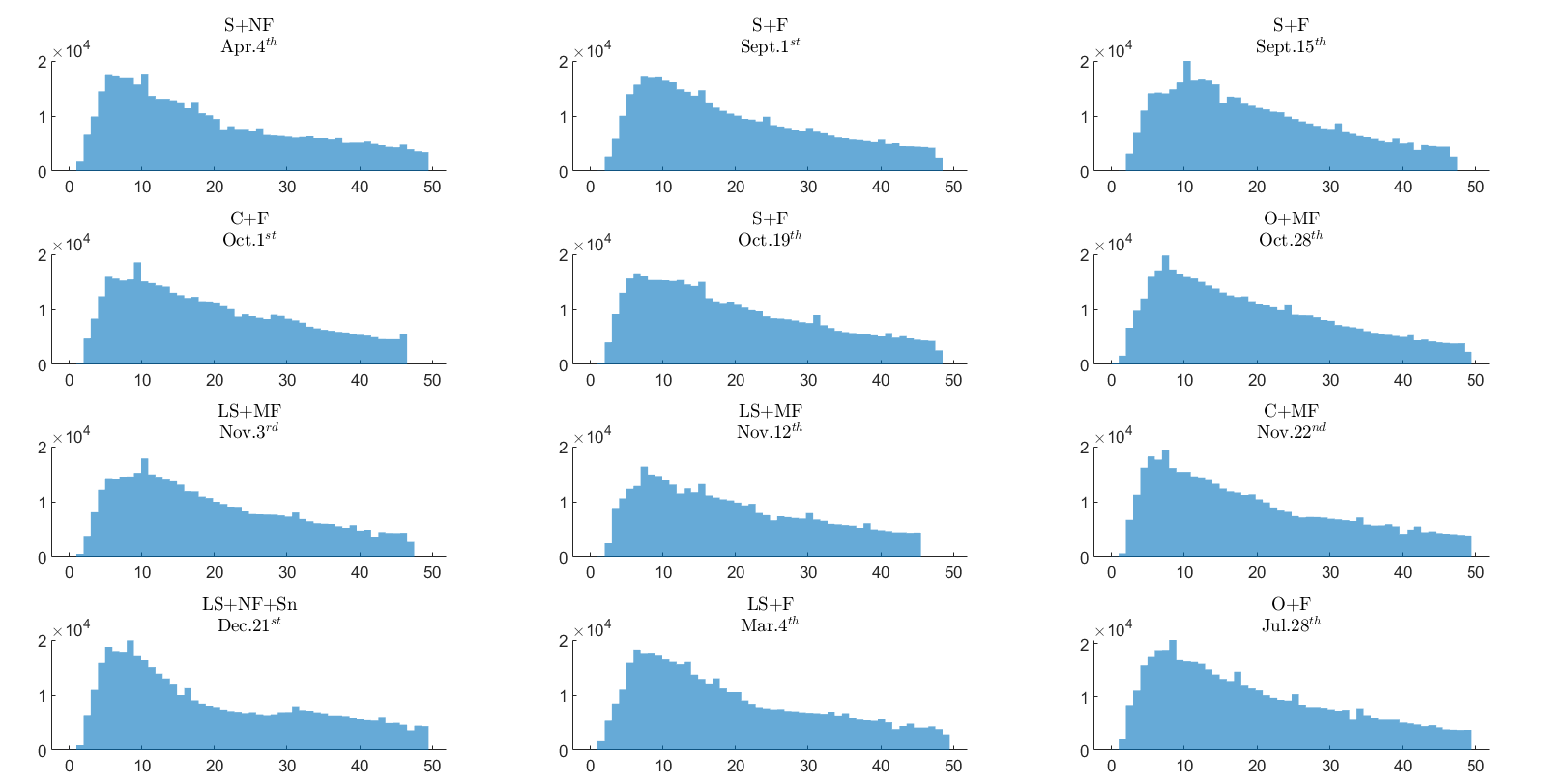

Dataset Statistics

The distributions of relative depth values for all environments after mean quartile alignment are shown below.

Dataset Details

Detailed information about the data partitioning, directory structure, and image format can be found HERE.

SeasonDepth Benchmark

Baseline Evaluation Results

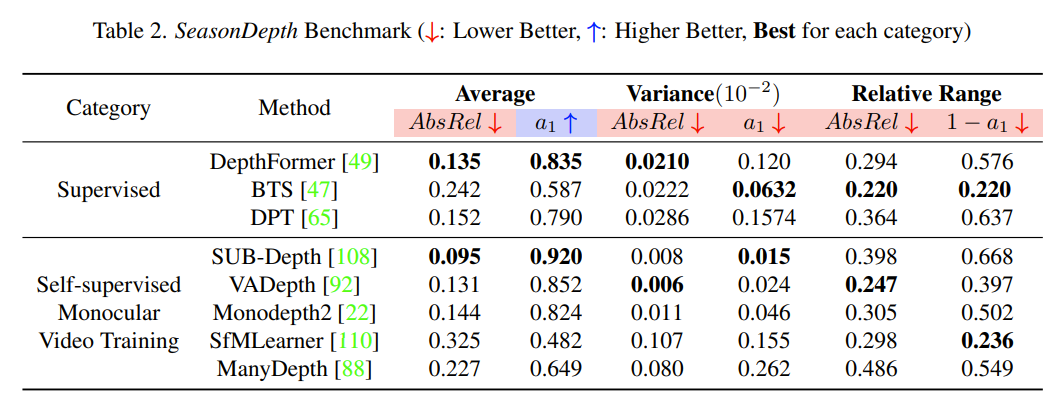

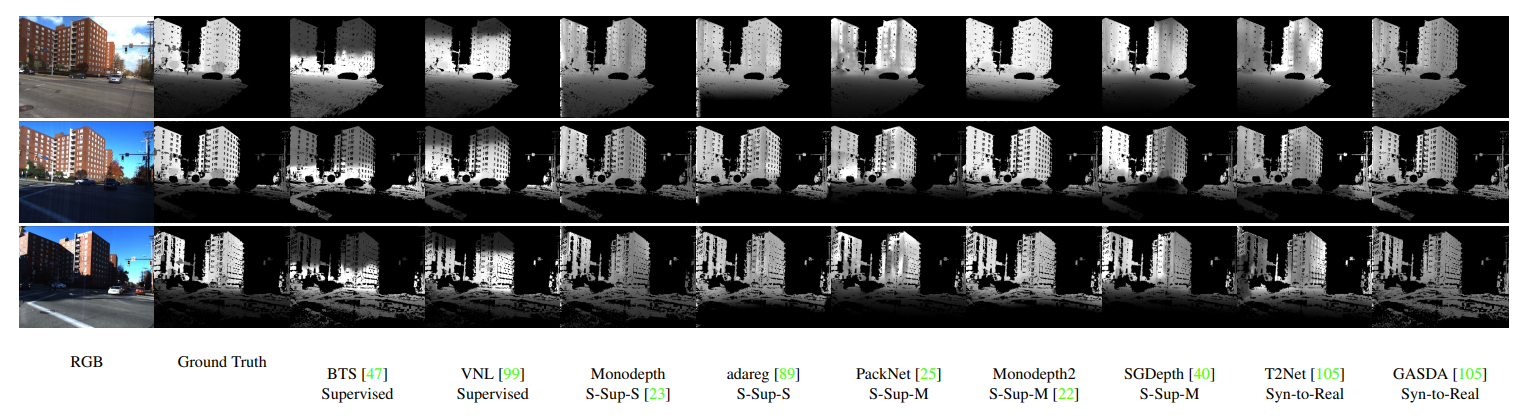

Results of several baselines on the SeasonDepth test set are shown below. The full leaderboard of the test set, with more models, can be found here.

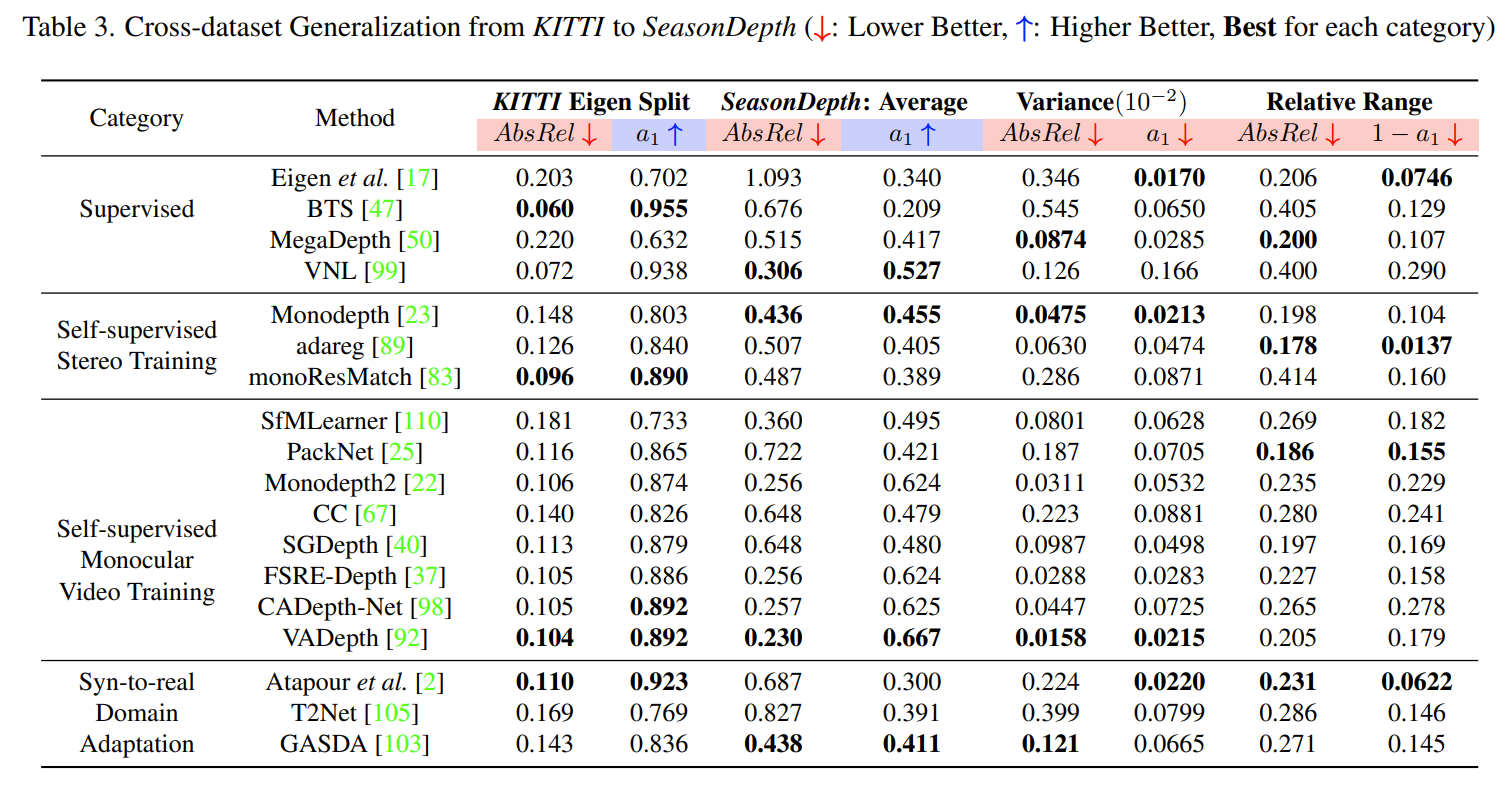

We also present the cross-dataset performance of monocular depth estimation from KITTI to SeasonDepth, evaluated on our validation set. Please refer to our paper for more details.

Benchmark Toolkit

Please visit our official repository for the SeasonDepth benchmark toolkit.

License

The SeasonDepth dataset, the toolkit code, and the fine-tuned models are released under the CC BY-NC-SA 4.0 license.

Citation

If you use the SeasonDepth dataset, please cite all three of the following references:

@inproceedings{SeasonDepth2023hu,
title={SeasonDepth: Cross-Season Monocular Depth Prediction Dataset and Benchmark under Multiple Environments},
author={Hu, Hanjiang and Yang, Baoquan and Qiao, Zhijian and Liu, Shiqi and Zhu, Jiacheng and Liu, Zuxin and Ding, Wenhao and Zhao, Ding and Wang, Hesheng},
booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
year={2023},
organization={IEEE}
}

@inproceedings{Sattler2018CVPR,
author={Sattler, Torsten and Maddern, Will and Toft, Carl and Torii, Akihiko and Hammarstrand, Lars and Stenborg, Erik and Safari, Daniel and Okutomi, Masatoshi and Pollefeys, Marc and Sivic, Josef and Kahl, Fredrik and Pajdla, Tomas},
title={{Benchmarking 6DOF Outdoor Visual Localization in Changing Conditions}},
booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},
year={2018}
}

@inproceedings{badino2011visual,
title={Visual topometric localization},
author={Badino, Hern{\'a}n and Huber, Daniel and Kanade, Takeo},
booktitle={2011 IEEE Intelligent Vehicles Symposium (IV)},
pages={794--799},
year={2011},
organization={IEEE}
}

Contact

If you have any questions, please contact us at seasondepth@outlook.com.

Last updated: Nov. 21st, 2022